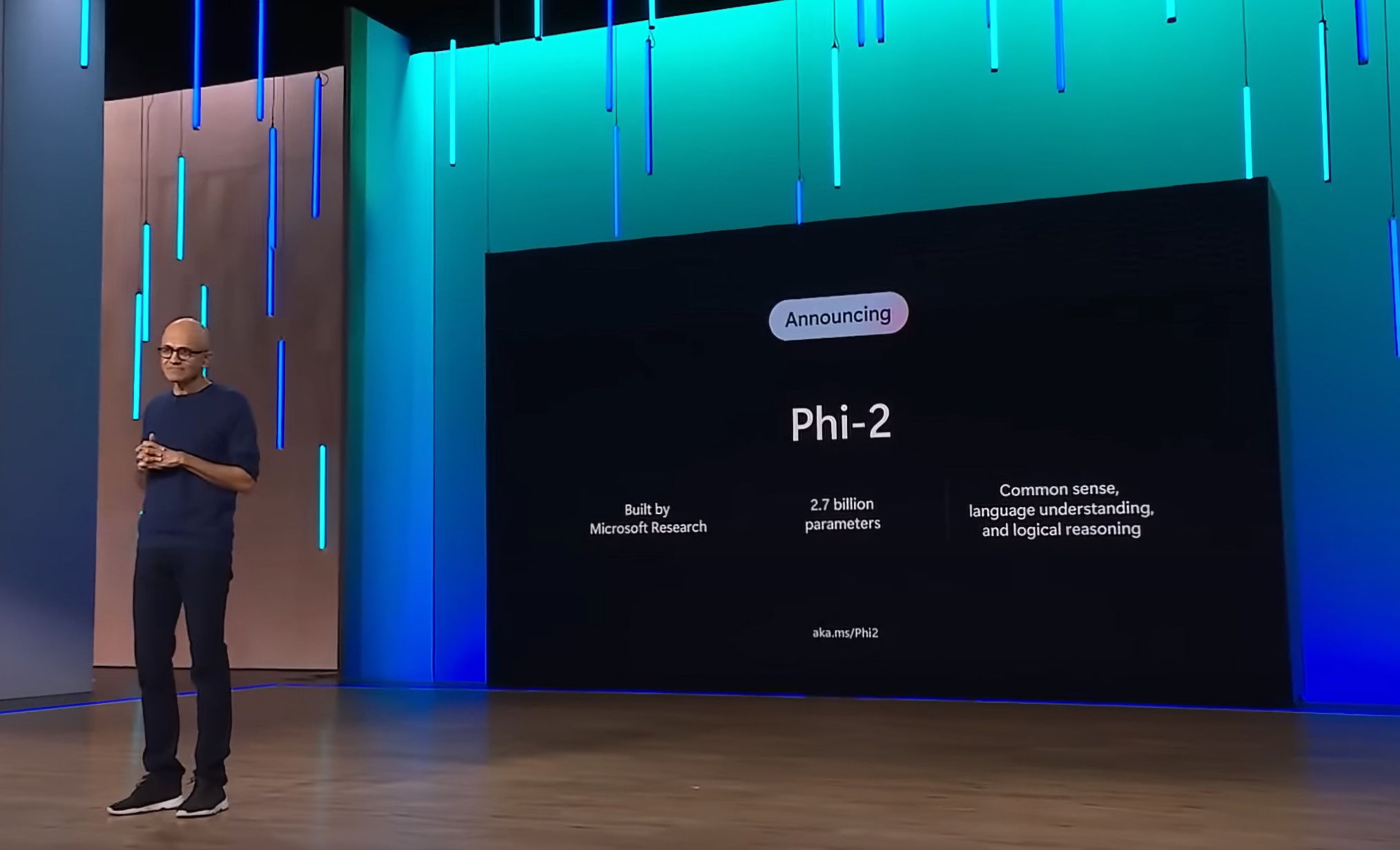

Microsoft Reveals New 2.7 Billion Parameter Language Model: Phi-2

Microsoft’s Phi-2 Model: The Surprising Power of Small Language Models

Overview

Microsoft’s Phi-2 is a 2.7 billion-parameter language model with strong reasoning and language understanding capabilities for its size. According to Microsoft, it sets a new bar for performance among base language models with fewer than 13 billion parameters, and on complex benchmarks it matches or outperforms models up to 25 times larger.

Innovations

Phi-2 builds upon the success of its predecessors, Phi-1 and Phi-1.5, and introduces innovations in model scaling and training data curation. Its compact size makes it an ideal playground for researchers to explore mechanistic interpretability, safety improvements, and fine-tuning experimentation.

Key Aspects

- Training Data Quality: Phi-2 leverages “textbook-quality” data, including synthetic datasets designed to impart common-sense reasoning and general knowledge, as well as carefully selected web data filtered based on educational value and content quality.

- Innovative Scaling Techniques: Microsoft starts from the 1.3 billion-parameter Phi-1.5 and transfers its knowledge into the 2.7 billion-parameter Phi-2, which accelerates training convergence and boosts benchmark scores.

Performance Evaluation

Microsoft evaluates Phi-2 on Big Bench Hard (BBH) and on benchmarks covering commonsense reasoning, language understanding, math, and coding. It reports that Phi-2 outperforms the larger Mistral and Llama-2 models at 7 billion and 13 billion parameters, and matches or outperforms Google’s Gemini Nano 2.

Real-World Scenarios

Microsoft also tests Phi-2 on prompts commonly used in the research community. In these examples, the model solves a physics problem and identifies and corrects a mistake in a student’s calculation.

Training Data and Process

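Phi-2 is a Transformer-based model trained on 1.4 trillion tokens drawn from synthetic and web datasets. Training took 14 days on 96 A100 GPUs. Phi-2 is a base model that has not been aligned through reinforcement learning from human feedback (RLHF) or instruction fine-tuned, yet Microsoft reports that it behaves better with respect to toxicity and bias than existing open-source models that went through alignment.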
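
To put the training figures above in perspective, here is a minimal back-of-the-envelope sketch. It uses only the numbers quoted in this section. The function names are hypothetical, the GPU-hour figure assumes all 96 GPUs ran for the full 14 days, and the FLOPs estimate relies on the common "6 × parameters × tokens" rule of thumb, which is an assumption and not something Microsoft reported.

```python
# Figures quoted in the article.
PARAMS = 2.7e9    # model parameters
TOKENS = 1.4e12   # training tokens
GPUS = 96         # A100 GPUs
DAYS = 14         # wall-clock training time in days


def gpu_hours(gpus: int, days: int) -> int:
    """Total GPU-hours, assuming every GPU runs for the whole period."""
    return gpus * days * 24


def tokens_per_parameter(tokens: float, params: float) -> float:
    """How many training tokens the model saw per parameter."""
    return tokens / params


def approx_training_flops(params: float, tokens: float) -> float:
    """Rough compute estimate using the 6 * N * D rule of thumb (assumption)."""
    return 6 * params * tokens


if __name__ == "__main__":
    print(f"GPU-hours:            {gpu_hours(GPUS, DAYS):,}")
    print(f"Tokens per parameter: {tokens_per_parameter(TOKENS, PARAMS):.0f}")
    print(f"Approx. train FLOPs:  {approx_training_flops(PARAMS, TOKENS):.3e}")
```

The tokens-per-parameter ratio of roughly 500 illustrates how heavily the model leans on data volume and quality relative to its size, which is consistent with the article’s emphasis on data curation.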

Conclusion

With the launch of Phi-2, Microsoft continues to expand the capabilities of smaller base language models. The model’s exceptional performance and versatility open new avenues for research and applications in artificial intelligence.

